Research

Overview

How do animals process sensory information to control their motion, and how should one design sensor-based robot control systems? Answering these questions involves integrating biomechanics and dynamics with biological and engineering computation.

We study sensorimotor control of animal movement, using a “control theoretic” perspective; specifically, we use mathematical models of biomechanics, together with principles of control theory, to design perturbations. The responses to these perturbations can be used to furnish a quantitative description of the way the nervous system processes sensory information for control.

Note: we are notoriously bad at updating this page; the Publications, however, are kept much more up to date. This page is meant only as a sampler, usually of older projects (sometimes much older).

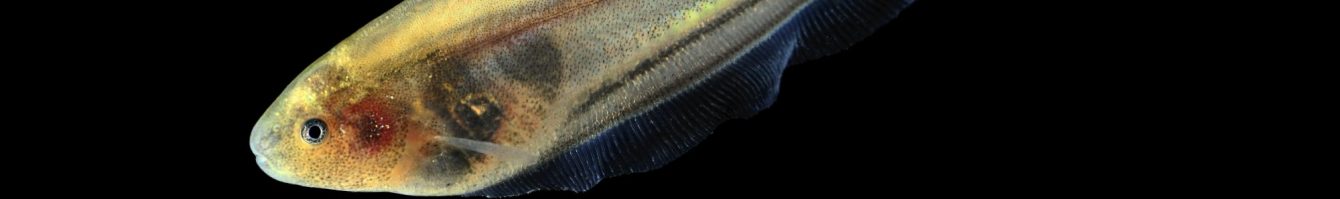

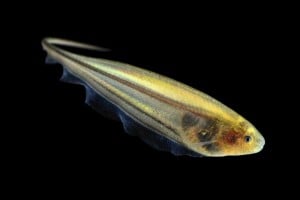

Sensorimotor Integration In Weakly Electric Fish

The LIMBS laboratory, in close collaboration with Eric Fortune, is investigating sensorimotor integration and control in weakly electric knifefish. With the support of the National Science Foundation and the Office of Naval Research, we are studying a range of behaviors, from locomotion control to how these animals modulate their electric output during complex social interactions.

People: Prof. Noah Cowan, Prof. Eric Fortune, Debojyoti Biswas, Yu Yang, Daniel Wang, Joy Yeh, Victoria Liu, Holland Low

Alumni: Dominic Yared, Dr. Ismail Uyanik, Yuqing Eva Pan, Emily Sturm, Brittany Nixon, Kyle Yoshida, Ravikrishnan Jayakumar, Erin Sutton, Manu Madhav, Sarah Stamper, Shahin Sefati, Eatai Roth, Sean Carver, Yoni Silverman, Terrence Jao, Katie Zhuang

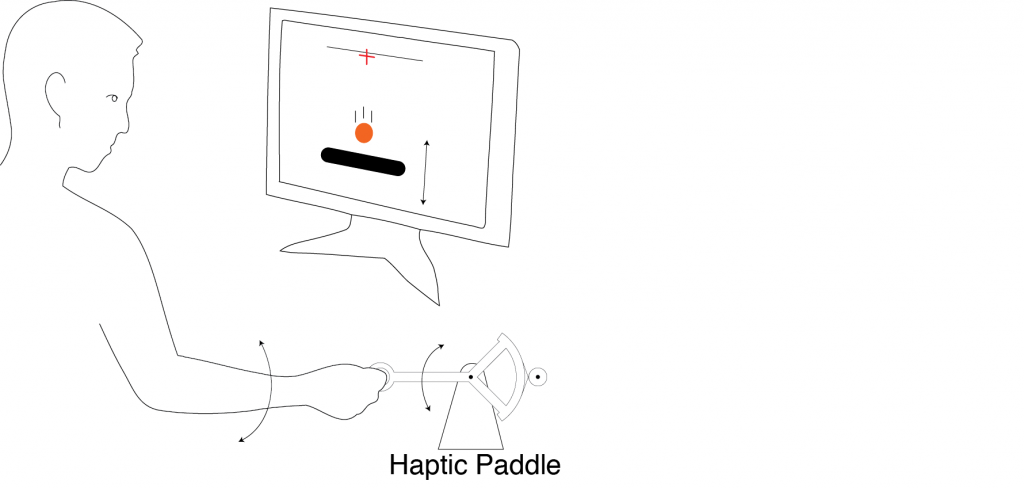

Motion Control in Persons with Cerebellar Ataxia

The LIMBS laboratory, in close collaboration with Amy Bastian, is investigating movement deficits in persons with cerebellar ataxia. With the support of the National Science Foundation, we are developing mathematical models of the visuomotor control used in upper limb tracking tasks and of how this control is affected by cerebellar ataxia.

People: Prof. Noah Cowan, Prof. Amy Bastian, Di Cao, Michael Wilkinson, Krystal Lan

Alumni: Dr. Amanda Zimmet

Somatosensory Flight Control of Bats

The LIMBS laboratory, in close collaboration with Cynthia Moss, is investigating how bats use sensory information about airflow to control flight. With the support of the National Science Foundation and JHU Kavli NDI, we are analyzing how bats encode airflow information in their somatosensory cortex and how this information is used to achieve robust flight control.

People: Prof. Noah Cowan, Prof. Cynthia Moss, Michael Wilkinson, Dr. Dimitri Skandalis

Antenna-Based Tactile Sensing for High-Speed Wall Following

Myriad creatures rely on compliant tactile arrays for locomotion control, mapping, obstacle avoidance, and object recognition. Our laboratory is “reverse engineering” the neural controller for cockroach wall following to better understand sensorimotor integration in nature. In addition, we are building tactile sensors inspired by their biological analogs.

Human Rhythmic Movement: Control, Timekeeping, and Statistical Modeling

How do humans control rhythmic dynamic behaviors such as walking and juggling? We address this central question through a combination of virtual reality experiments, systems theoretic modeling, and computational analyses.

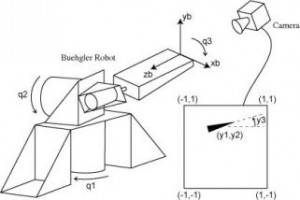

Vision-Based Control

Vision-based control, also known as visual servoing, is a field of robotics in which a computer controls a robot’s motion under visual guidance, much as people do in everyday life when reaching for objects or walking through a cluttered room.

People: Prof. Noah Cowan, Prof. Greg Hager

Alumni: John Swensen, Vinutha Kallem, Maneesh Dewan

Representative Publications:

V. Kallem, M. Dewan, J. P. Swensen, G. D. Hager, and N. J. Cowan. “Kernel-based visual servoing.” Proc IEEE/RSJ Int Conf Intell Robots Syst (IROS), San Diego, CA, USA, Oct 29 – Nov 2, 2007.

J. P. Swensen and N. J. Cowan. “An almost global estimator on SO(3) with measurement on S^2.” Proc Amer Control Conf, Montreal, Canada, 2012.

N. J. Cowan, J. Weingarten, and D. E. Koditschek. “Visual servoing via navigation functions.” IEEE Trans Robot and Automat, 18(4):521-533, 2002.

N. J. Cowan and D. E. Chang. “Geometric visual servoing.” IEEE Trans Robot, 21(6):1128-1138, 2005.